Senegal unveils report on WWII massacre by French colonial army

Fri, 17 Oct 2025 01:38:04 GMT

Investigations into one of the worst massacres in France’s colonial history took a step forward on Thursday when researchers presented an official report to Senegalese President Bassirou Diomaye Faye.

The document aims to clarify events in 1944 when the French colonial army in Senegal massacred African troops who had fought alongside them in World War II.

Even …

After traveling alone to US, Guatemalan teens fear deportation

At age 15, I.B. fled poverty and a father who abused her in Guatemala. She emigrated without her parents to the United States, like hundreds of children Donald Trump’s administration recently tried to deport.

Between October 2024 and August 2025, 28,867 unaccompanied minors entered the United States, a 70 percent drop from the previous period, according to US Customs and Border Protection.

Hundreds are Guatemalans from impoverished indigenous communities, as shown by court documents recently obtained by AFP.

I.B. entered the United States in September 2024 and was sent to live with a foster family in Connecticut by the Office of Refugee Resettlement (ORR), a US government agency that handles cases of unaccompanied minors.

“I had to leave Guatemala because of all my suffering there,” a court document quotes her as saying. “There were times when we had no food, and sometimes I had to eat food from dumpsters to survive.”

“My father was not part of my life since I was very young, and during one of the few times I saw him, he abused me,” she added.

In August, immigration officers asked her if she had any family in her home country.

“No one asked me if I was afraid to go back to Guatemala, which I am.”

- ‘Get ready’ -

I.B. is represented by the National Immigration Law Center (NILC), an NGO that blocked the deportation of 76 unaccompanied Guatemalan minors from an airport in Harlingen, Texas on August 31.

Another minor referred to as F.O.Y.P. was in that group.

“At about 1 o’clock in the morning, they arrived in my room and told me they were going to be transporting me out of the shelter. They gave me only about 20 to 30 minutes to get ready,” the 17-year-old said.

It was not clear where he was being taken, but “finally, they told us that we were all going to be going back to Guatemala.”

He was taken to an airport where a group of 76 teens waited for four hours on buses and four more in an airplane.

Eventually they were taken off the plane and, according to court testimony, returned to shelters.

Their deportation was blocked by a judge who issued an emergency injunction, saying it is illegal to deport unaccompanied children when an immigration judge has not ruled on their cases.

In mid-September, a federal judge in Washington, Timothy Kelly, extended the block. The administration of President Donald Trump has yet to appeal.

The halted deportation is a victory not only for the Guatemalan teens taken off the plane, but also for other unaccompanied minors “for whom the court also concluded that attempts to expel them without the protections of the law would likely be unlawful,” said Mary McCord of the Institute for Constitutional Advocacy and Protection at Georgetown University in Washington.

According to the US government, 327 Guatemalan children older than 14 qualify to be returned to their country of origin under a bilateral accord. Guatemala’s government says the number is more than 600.

- ‘I do not want to go back’ -

The US Department of Homeland Security maintains the minors should be with their families, but Judge Kelly found that was not necessarily what the families wanted.

“There is no evidence before the Court that the parents of these children sought their return,” Kelly wrote. “To the contrary, the Guatemalan Attorney General reports that officials could not even track down parents for most of the children whom Defendants found eligible for their reunification.”

Guatemalan President Bernardo Arevalo said the decision to repatriate the minors was based on fears that once they turned 18 they could be removed from shelters and placed in Immigration and Customs Enforcement (ICE) detention centers.

“We will be happy to accept any unaccompanied child who is able to return voluntarily or by court order,” he said.

What do the kids themselves have to say?

“Here in the US, I live with my foster family who treats me well and supports me… I do not want to go back to Guatemala,” I.B. said.

Another teen, identified as M.A.L.R., said that on August 29 a judge informed her that her name was on a list of Guatemalan children who wanted to return home. But she did not.

When she was taken from her foster family and put on a bus, she felt sick and feverish and almost vomited.

M.A.L.R. fled Guatemala at age 15 after she and her family received death threats from a man whose advances she had rebuffed.

B.M.R.P., her mother, said she had never been contacted by the government in Guatemala or the United States.

“I also never told anyone I wanted M. to return. I think she is in danger if she does return to Guatemala,” court documents quote her as saying.

“All I ask is that you help my daughter stay safe, help her stay safe by not returning her to Guatemala.”

Trump critic John Bolton indicted for mishandling classified info

John Bolton, Donald Trump’s former national security advisor, was indicted on Thursday, the third foe of the US president to be hit with criminal charges in recent weeks.

The 76-year-old veteran diplomat was charged by a federal grand jury in Maryland with 18 counts of transmitting and retaining classified information.

The 26-page indictment accuses Bolton of sharing top secret documents by email with two “unauthorized individuals” who are not identified but are believed to be his wife and daughter.

It says he shared more than 1,000 pages of “diary-like” entries about his work as national security advisor via non-government email or a messaging app.

The Justice Department said the documents “revealed intelligence about future attacks, foreign adversaries, and foreign-policy relations.”

Each of the counts carries a maximum sentence of 10 years in prison.

“Anyone who abuses a position of power and jeopardizes our national security will be held accountable. No one is above the law,” Attorney General Pam Bondi said in a statement.

In a statement to US media, Bolton rejected the charges and said he had “become the latest target in weaponizing the Justice Department… with charges that were declined before or distort the facts.”

Asked for his reaction to Bolton’s indictment, Trump told reporters his former aide is a “bad guy” and “that’s the way it goes.”

- Trump critics in legal jeopardy -

Bolton’s indictment follows the filing of criminal charges by the Justice Department against two other prominent critics of the Republican president: New York Attorney General Letitia James and former FBI director James Comey.

The 66-year-old James was indicted by a grand jury in Virginia on October 9 on charges of bank fraud and making false statements related to a property she purchased in 2020 in Norfolk, Virginia.

James, who successfully prosecuted Trump for financial fraud, has rejected the charges as “baseless” and described them as “political retribution.”

Comey, 64, pleaded not guilty on October 8 to charges of making false statements to Congress and obstructing a congressional proceeding.

His lawyer has said he will seek to have the case thrown out on the grounds that it is a vindictive and selective prosecution.

Trump recently publicly urged Bondi in a social media post to take action against James, Comey and others he sees as enemies, in an escalation of his campaign against political opponents.

Trump did not specifically mention Bolton in the Truth Social post, but he has lashed out at his former advisor in the past and withdrew his security detail shortly after returning to the White House in January.

- ‘Unfit to be president’ -

A longtime critic of the Iranian regime, Bolton was a national security hawk and has received death threats from Tehran.

As part of the investigation into Bolton, FBI agents raided his suburban Maryland home and his Washington office in August.

Bolton served as Trump’s national security advisor in his first term and later angered the administration with the publication of a highly critical book, “The Room Where It Happened.”

He has since become a highly visible and pugnacious detractor of Trump, frequently appearing on television news shows and in print to condemn the man he has called “unfit to be president.”

Since January, Trump has taken a number of punitive measures against perceived enemies, purging government officials he deemed to be disloyal, targeting law firms involved in past cases against him and pulling federal funding from universities.

After Trump left the White House in 2021, James brought a major civil fraud case against him, alleging he and his real estate company had inflated his wealth and manipulated the value of properties to obtain favorable bank loans or insurance terms.

A New York state judge ordered Trump to pay $464 million, but a higher court removed the financial penalty while upholding the underlying judgment.

The cases against James and Comey were filed by Trump’s handpicked US attorney, Lindsey Halligan, after the previous federal prosecutor resigned, saying there was not enough evidence to charge them.

Appointed to head the Federal Bureau of Investigation by then-president Barack Obama in 2013, Comey was fired by Trump in 2017 amid the probe into whether any members of the Trump presidential campaign had colluded with Moscow to sway the 2016 election.

Trump was accused of mishandling classified documents after leaving the White House and plotting to overturn the results of the 2020 election.

Neither case came to trial, and special counsel Jack Smith, in line with a Justice Department policy of not prosecuting a sitting president, dropped them both after Trump won the November 2024 presidential election.

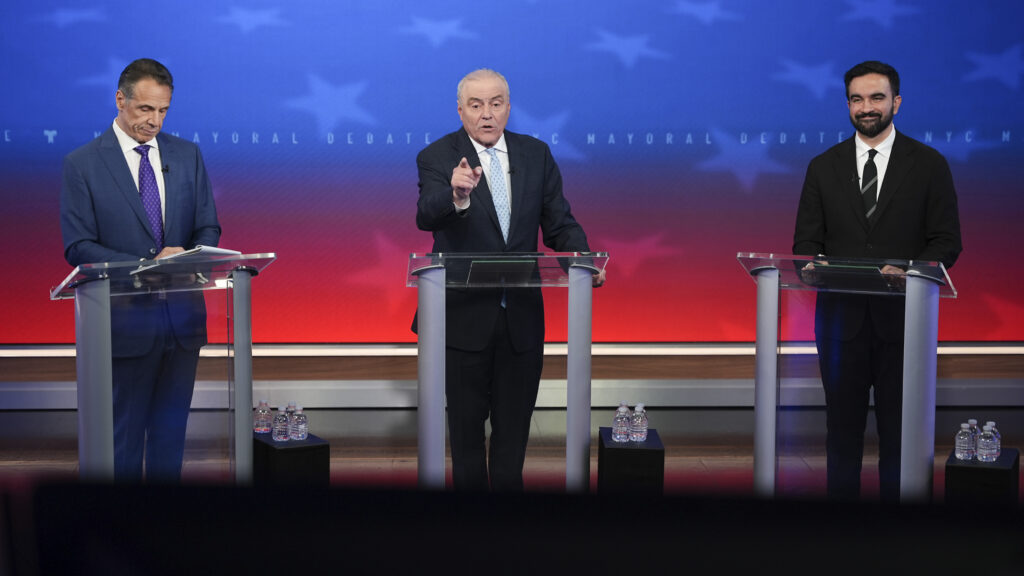

NY mayoral hopefuls clash in high-stakes debate

A socialist, an accused molester and a vigilante all hoping to be New York’s next mayor clashed in a debate with “high levels of testosterone” Thursday as the unpredictable campaign enters the homestretch.

Democratic candidate and frontrunner Zohran Mamdani, independent former New York governor Andrew Cuomo and Republican Curtis Sliwa pitched to voters in the first of two televised debates ahead of the November 4 election. Early voting begins on October 25.

Mamdani attacked Cuomo for his alleged sexual misconduct and controversial governing record “sending seniors to their death in nursing homes” during the Covid pandemic.

“Thank God I’m not a professional politician because they have created the crime crisis in this city,” Sliwa said, gesturing at his two rivals.

“There’s high levels of testosterone in this room,” he said later.

Mamdani pulled off a stunning upset in the Democratic Party primary, defeating political scion Cuomo, who had been the favorite for weeks, to become the party’s official nominee.

Mamdani has promised free bus services, rent freezes and city-run supermarkets, which Cuomo has panned as fanciful and unaffordable government overreach.

The race to govern the city’s 8.5 million people was again upended when sitting Mayor Eric Adams, who has been engulfed in corruption allegations, quit the race without endorsing another candidate.

Cuomo, 67, was the state governor from 2011 until 2021, when he resigned over sexual assault allegations.

Mamdani, 33, is a state lawmaker for the city borough of Queens and has run an insurgent grass-roots campaign that has motivated young New Yorkers in large numbers.

- ‘Take on Trump’ -

Trump has threatened to withhold federal funds from Mamdani’s administration if he is elected, calling him a “communist.”

But Mamdani said, “I would make it clear to the president that I am willing to not only speak to him, but to work with him, if it means delivering on lowering the cost of living for New York.”

Cuomo warned “Trump will take over New York City, and it will be Mayor Trump” if Mamdani won, mirroring the takeover of much of the administration of the capital Washington.

Trump said Wednesday he had “terminated” the $16 billion Hudson Gateway tunnel linking New York to New Jersey, a years-long megaproject. Asked in the debate for his dream news headline, Mamdani said it would be “Mamdani continues to take on Trump.”

Quinnipiac University polling suggests most voters will not have their minds changed by the TV debate, with just 18 percent of Mamdani and Cuomo’s supporters “not likely” to alter their pick, compared to 24 percent of Sliwa’s backers.

In the latest polling, Sliwa, a 71-year-old who founded the Guardian Angels vigilante group in 1979, is trailing in a distant third with 15 percent, behind Cuomo’s 33 percent and Mamdani’s 46 percent.

Sliwa insisted he would not bow to inducements to quit the race, such as lucrative jobs with fat salaries and a driver, which he alleged were arranged by Cuomo, who denies the claim.

“I said, ‘Hey, this is not only unethical, it’s bribery, and it could be criminal,’” Sliwa told AFP ahead of the showdown.

One of the most acrimonious exchanges in the debate, held without an audience, centered on the safety of New York’s significant Jewish community.

Cuomo accused Mamdani of not condemning Hamas and endorsing an epithet he claimed meant death to all Jews globally, while Sliwa accused both of being soft on hate crimes because of their endorsement of cash bail.

“Why would (Mamdani) not condemn Hamas? He still won’t denounce ‘globalize the intifada,’ which means kill all Jews,” Cuomo said, drawing an instant rejection from Mamdani.

Sliwa pointed to his leadership of a vigilante group, saying he had “been there for all people at all times for 46 years as leader of the Guardian Angels here and around the world.”

A second debate will be held on October 22.

Big US banks no longer required to gauge climate risk

Big US banks are no longer required to pay particular attention to the climate change-related risks weighing on their business, US regulatory agencies including the Federal Reserve announced on Thursday.

“Good riddance,” one of the US central bank’s officials, Governor Christopher Waller, said in a two-word statement.

Waller is one of the people tipped to take the helm of the institution when Jerome Powell’s term ends next spring. To do so he must be nominated by US President Donald Trump, an avowed climate skeptic.

In October 2023, a series of recommendations was published so that banks would examine the climate risks that could destabilize their business. The growing number of devastating fires, floods and hurricanes, for example, carries a high insurance cost.

These recommendations were addressed to banks with more than $100 billion in assets.

- ‘Short-sighted’ -

US regulatory agencies “consider that principles for managing climate-related financial risks are not necessary, because existing safety and soundness standards require all supervised institutions to have effective risk management in place, suited to their size, complexity and activities,” according to their joint statement.

“In addition, all supervised institutions are required to consider and appropriately address all material financial risks and must be resilient to a range of risks, including emerging risks,” it added.

In detail, on the Fed’s Board of Governors, five members voted to withdraw the recommendations, one abstained (Governor Lisa Cook) and one voted against.

That was Michael Barr, the Fed’s former vice chair for banking supervision, who had championed the change and who announced his resignation from that post a few days before Donald Trump’s return to power in January. He has remained a governor.

In a separate statement, Barr lamented a decision that is “short-sighted and will make the financial system riskier even as climate-related financial risks are growing.”

His successor, Michelle Bowman, had voiced strong reservations.

“These guidelines have had the effect of sowing confusion about supervisors’ expectations and increasing compliance costs and burdens, without a commensurate improvement in the safety and soundness of financial institutions or in the financial stability of the United States,” she argued in her own statement.

Gaza: Turkey sends teams to search for hostages’ remains, Trump issues warning

Turkey began deploying specialists on Thursday to help search for bodies buried under the rubble in Gaza, as Donald Trump issued a warning to Hamas after a series of executions carried out in the Palestinian territory.

US President Donald Trump, the architect of the plan aimed at ending two years of war, threatened on Thursday to “go in and kill” Hamas members if the group did not stop killing people in Gaza.

Since the fighting stopped, the Palestinian Islamist movement has extended its presence across the ruined Gaza Strip and on Tuesday claimed in a video the execution of men described as “collaborators” with Israel.

Hamas reaffirmed “its commitment” to the “implementation” of the Gaza ceasefire agreement negotiated with Israel under US auspices, and again pledged to “hand over all the remaining bodies” of hostages.

Israel accuses Hamas of violating the ceasefire agreement that took effect on October 10, which provided for the return of all hostages, living and dead, by Monday morning.

Under the ceasefire agreement, Hamas released on time the last 20 living hostages held in the Gaza Strip, but since Monday has handed over only nine of the 28 bodies it holds. Hamas maintains that these are the only bodies it has been able to reach, saying it needs “special equipment” to recover the other remains.

On Thursday, Turkey announced it was sending specialists to take part in the search for buried bodies, “including those of hostages.” Some 80 of these rescuers, accustomed to difficult terrain, notably after earthquakes, are already on site, according to Turkish authorities.

Israeli Prime Minister Benjamin Netanyahu again said on Thursday he was “determined” to bring back “all the hostages,” speaking at the official commemoration of the second anniversary of Hamas’s unprecedented October 7, 2023 attack on Israeli soil, which triggered the war that has killed tens of thousands of people in Gaza.

He is under pressure from the hostages’ families, who called on him “to immediately halt the implementation of any further stage of the agreement” initiated by US President Donald Trump until all the bodies are returned. The day before, his Defense Minister Israel Katz threatened to resume the offensive, “in coordination with the United States,” if “Hamas refuses to respect the agreement.”

- ‘They’re digging’ -

Donald Trump had appeared to call for patience on Wednesday: “It’s a gruesome process (…) but they’re digging, they’re really digging” and “finding a lot of bodies,” he said when asked by reporters about the issue.

In exchange for the return of hostages’ remains, Israel has handed over a total of 120 Palestinian bodies, including 30 on Thursday in Gaza, according to the Hamas health ministry.

Access routes into Gaza, all controlled by Israel, remain highly restricted. Following the ceasefire and the release of the hostages, Israel is in principle due to open the crucial Rafah crossing, between Egypt and the Palestinian territory, to humanitarian aid.

Israeli Foreign Minister Gideon Saar announced on Thursday that it would open “probably on Sunday.”

In late August, the UN, which is calling for the immediate opening of all crossings, declared a famine in several areas of Gaza, which Israel disputes.

Back in the ruins of Gaza City, several residents are setting up tents or makeshift shelters amid the rubble, AFP footage showed.

“We have been thrown onto the street. There is no water, no food, no electricity. Nothing. All of Gaza City has been reduced to ashes,” said Mustafa Mahram.

Donald Trump’s plan, which aims to bring a definitive end to the war in the Gaza Strip, provides in a first phase for the ceasefire, the release of the hostages, an Israeli withdrawal from several areas and the delivery of more humanitarian aid into the devastated territory.

A later stage would notably cover the disarmament of Hamas, amnesty or exile for its fighters and a continued Israeli withdrawal, points that remain under discussion.

The October 7 attack resulted in the deaths of 1,221 people on the Israeli side, mostly civilians, according to an AFP tally based on official figures.

Israel’s retaliatory campaign has killed 67,967 people in Gaza, mostly civilians, according to figures from the Hamas health ministry.

Turkish experts to help find bodies in Gaza, as Trump warns Hamas

Turkey has deployed dozens of disaster relief specialists to help search for bodies under the mountains of rubble in Gaza, as US President Donald Trump fired a warning at Hamas Thursday over a spate of recent killings in the territory.

Trump characterised the killings as a breach of the ceasefire deal he spearheaded, under which the Palestinian militant group returned its last 20 surviving hostages to Israel.

Hamas says it has also handed back all the bodies of deceased captives it can access, but the bodies of 19 more are still unaccounted for and believed to be buried under the ruins alongside an untold number of Palestinians.

The Palestinian militants stressed their “commitment” to the ceasefire deal with Israel, and that they want to return all the remaining bodies of hostages left in Gaza.

But the group said in a statement that the process “may require some time, as some of these corpses were buried in tunnels destroyed by the occupation, while others remain under the rubble of buildings it bombed and demolished”.

Turkey has sent staff from its disaster relief agency to help in locating the bodies, but the families of the dead have fumed at Hamas’s failure to deliver their loved ones’ remains.

The main campaign group advocating for the hostages’ families demanded Thursday that Israel “immediately halt the implementation of any further stages of the agreement as long as Hamas continues to blatantly violate its obligations regarding the return of all hostages and the remains of the victims”.

Prime Minister Benjamin Netanyahu reaffirmed his determination to “secure the return of all hostages” after his defence minister warned on Wednesday that Israel “will resume fighting” if Hamas failed to do so.

Trump had appeared to call for patience when it came to the bodies’ return, insisting Hamas was “actually digging” for hostages’ remains, but later expressed frustration on Thursday with the group’s conduct since the fighting halted.

“If Hamas continues to kill people in Gaza, which was not the Deal, we will have no choice but to go in and kill them,” Trump said on Truth Social in an apparent reference to recent shootings of Palestinian civilians.

Hamas has been accused of carrying out summary executions in Gaza since the ceasefire went into effect. Clashes have also taken place between the group’s various security units and armed Palestinian clans, some of which are alleged to have Israeli backing.

- Aid hopes -

The ceasefire deal has so far seen the war grind to a halt after two years of agony for the hostages’ families, and constant bombardment and hunger for Gazans.

According to Trump’s 20-point plan for Gaza, the next phases of the truce should include the disarmament of Hamas, the offer of amnesty to Hamas leaders who decommission their weapons and establishing the governance of post-war Gaza.

The plan also calls for renewed aid provision, with international organisations awaiting the reopening of southern Gaza’s Rafah crossing in the hope it will enable a surge of supplies.

Israeli Foreign Minister Gideon Saar said on the sidelines of a summit in Naples that preparations were being made for the strategic crossing, and that he “hoped” it would reopen Sunday, Italian news agencies reported.

Israel, however, said earlier on Thursday that the crossing would only be open to people, not aid, and Saar did not appear to elaborate, according to the reports.

The humanitarian situation has been dire in Gaza throughout the war, with the UN declaring famine in parts of the north in August.

The World Health Organization has warned that infectious diseases are “spiralling out of control”, with only 13 of the territory’s 36 hospitals even partially functioning.

“Whether meningitis… diarrhoea, respiratory illnesses, we’re talking about a mammoth amount of work,” Hanan Balkhy, regional director for the UN health body, told AFP in Cairo.

- ‘My children are home’ -

The families of the surviving hostages were able, after two long years without their loved ones, to rejoice in their return.

“My children are home! Two years ago, one morning, I lost half of my family,” said Sylvia Cunio, mother of Ariel and David Cunio, who were released from captivity.

Israel returned the bodies of 30 Palestinians to Gaza on Thursday, the territory’s health ministry said.

Under the ceasefire deal, Israel was to turn over the bodies of 15 Palestinians for every deceased Israeli returned.

For many in Gaza, while there was relief that the bombing had stopped, the road to recovery felt impossible, given the sheer scale of the devastation.

“There’s no water, no clean water, not even salty water, no water at all. No essentials of life exist: no food, no drink, nothing,” said Mustafa Mahram, who returned to Gaza City after the ceasefire.

“As you can see, all that’s left is rubble.”

The war has killed at least 67,967 people in Gaza, according to the health ministry in the Hamas-run territory, figures the United Nations considers credible.

The data does not distinguish between civilians and combatants but indicates that more than half of the dead are women and children.

Hamas’s October 7 attack on Israel resulted in the deaths of 1,221 people, mostly civilians, according to an AFP tally based on official Israeli figures.